2025

"Hands-on" Light Field Display via Engineering Scalable Optics, Algorithms, and Mounts

Feifan Qu, Pengfei Shen, Li Liao, Yifan Peng

SIGGRAPH Asia 2025 XR 2025

We present a hands-on light field display for shared XR to address the isolation of head-mounted displays. Using low-cost, off-the-shelf components, it projects a light field to create vivid 3D scenes with continuous parallax and integrates gesture tracking for intuitive interaction.

S³ Imagery: Specular Shading from Scratch-Anisotropy

Pengfei Shen, Feifan Qu, Li Liao, Ruizhen Hu, Yifan Peng

ACM SIGGRAPH Asia 2025 Conference Papers 2025

We explore creating continuous 3D virtual objects with view-dependent shading effects through scratch-based reflection on both flat and curved surfaces. We solve the ordinary differential equations under constraints calculated from established bidirectional reflectance distribution function models to optimize scratch distribution on substrate surfaces. We manufactured physical prototypes using carving machines, achieving specular imagery with realistic shading effects.

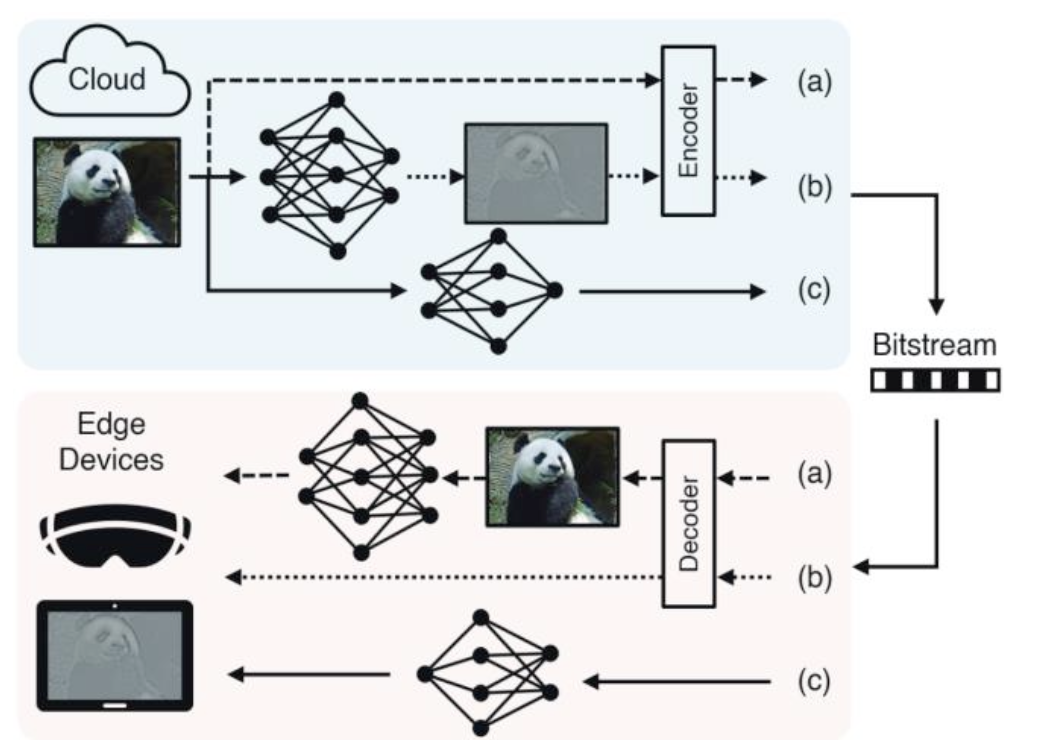

83‐3: Neural Network‐Empowered Hologram Compression for Computational Near‐Eye Displays

Hyunmin Ban, Wenbin Zhou, Xiangyu Meng, Feifan Qu, Yifan Peng

SID Symposium Digest of Technical Papers 2025

Holography enhances VR and AR displays by providing realistic 3D imagery, but current computer‐generated hologram (CGH) algorithms face high computational demands. This work presents an efficient hologram generation and compression method using a pre‐trained wave propagation model and a filter‐free design. The approach reduces data redundancy, simplifies hardware, and achieves near real‐time decoding, enabling practical use in compact AR/VR systems.

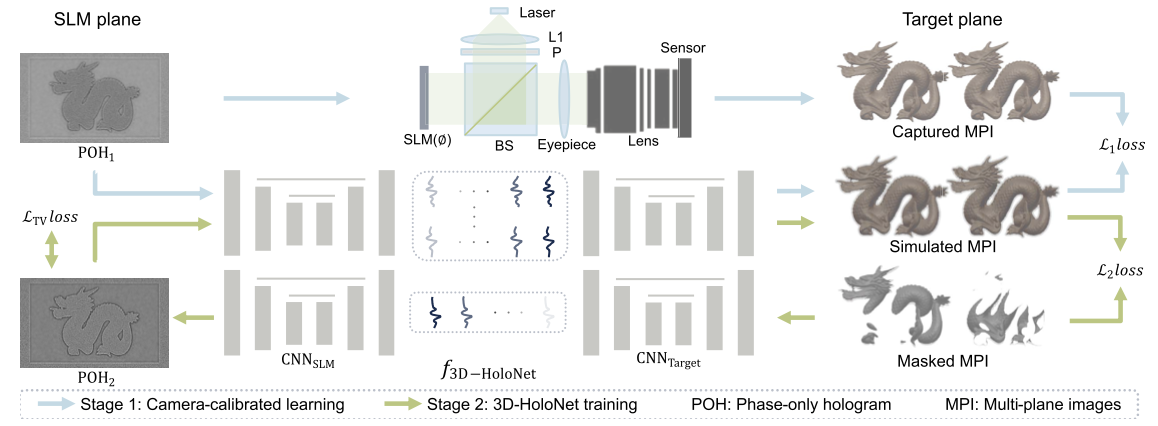

83‐4: Invited Paper: Filter‐Free 3D HoloNet with Hardware‐Aware Calibration

Feifan Qu, Wenbin Zhou, Xiangyu Meng, Zhenyang Li, Hyunmin Ban, Yifan Peng

SID Symposium Digest of Technical Papers 2025

Computational holography faces challenges in balancing speed and quality, especially for 3D content. This work presents 3D‐HoloNet, a deep learning framework that generates phase‐only holograms from RGB‐D scenes in real time. The method integrates a learned wave propagation model calibrated to physical displays and a phase regularization strategy, enabling robust performance under hardware imperfections. Experiments show the system achieves 30 fps at full‐HD resolution (single‐color channel) on consumer GPUs while matching iterative methods in reconstruction quality across multiple focal planes. By eliminating iterative optimization and physical filtering, 3D‐HoloNet addresses the critical speed‐quality trade‐off in unfiltered holographic displays.

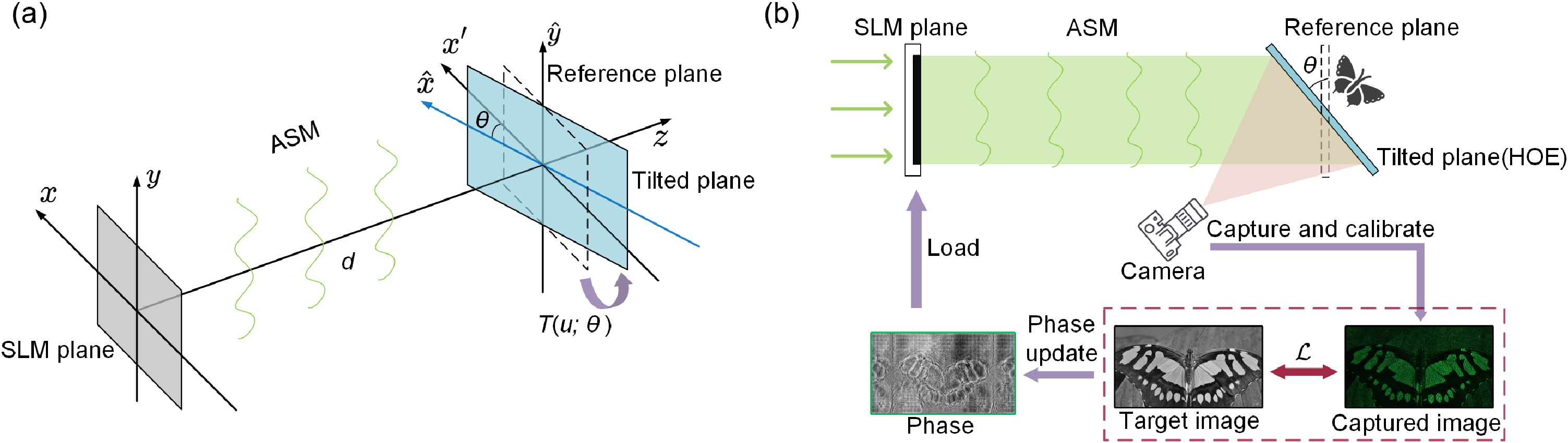

Off-axis holographic augmented reality displays with HOE-empowered and camera-calibrated propagation

Xinxing Xia, Daqiang Ma, Xiangyu Meng, Feifan Qu, Huadong Zheng, Yingjie Yu, Yifan Peng

OPTICA Photonics Research 2025

Holographic near-eye augmented reality (AR) displays featuring tilted inbound/outbound angles on compact optical combiners hold significant potential yet often struggle to deliver satisfying image quality. This is primarily attributed to two reasons: the lack of a robust off-axis-supported phase hologram generation algorithm; and the suboptimal performance of ill-tuned hardware parts such as imperfect holographic optical elements (HOEs). To address these issues, we incorporate a gradient descent-based phase retrieval algorithm with spectrum remapping, allowing for precise hologram generation with wave propagation between nonparallel planes. Further, we apply a camera-calibrated propagation scheme to iteratively optimize holograms, mitigating imperfections arising from the defects in the HOE fabrication process and other hardware parts, thereby significantly lifting the holographic image quality. We build an off-axis holographic near-eye display prototype using off-the-shelf light engine parts and a customized full-color HOE, demonstrating state-of-the-art virtual reality and AR display results.

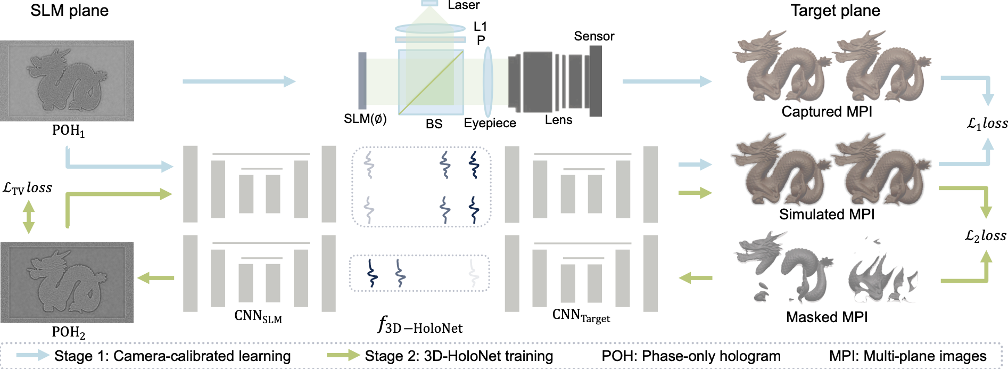

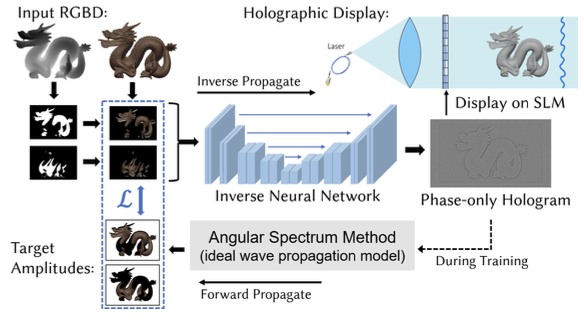

3D-HoloNet: fast, unfiltered, 3D hologram generation with camera-calibrated network learning

Wenbin Zhou*, Feifan Qu*, Xiangyu Meng, Zhenyang Li, Yifan Peng (* equal contribution)

OPTICA Optics Letters 2025

Computational holographic displays typically rely on time-consuming iterative computer-generated holographic (CGH) algorithms and bulky physical filters to attain high-quality reconstruction images. This trade-off between inference speed and image quality becomes more pronounced when aiming to realize 3D holographic imagery. This work presents 3D-HoloNet, a deep neural network-empowered CGH algorithm for generating phase-only holograms (POHs) of 3D scenes, represented as RGB-D images, in real time. The proposed scheme incorporates a learned, camera-calibrated wave propagation model and a phase regularization prior into its optimization. This unique combination allows for accommodating practical, unfiltered holographic display setups that may be corrupted by various hardware imperfections. Results tested on an unfiltered holographic display reveal that the proposed 3D-HoloNet can achieve 30 fps at full HD for one color channel using a consumer-level GPU while maintaining image quality comparable to iterative methods across multiple focused distances.

2024

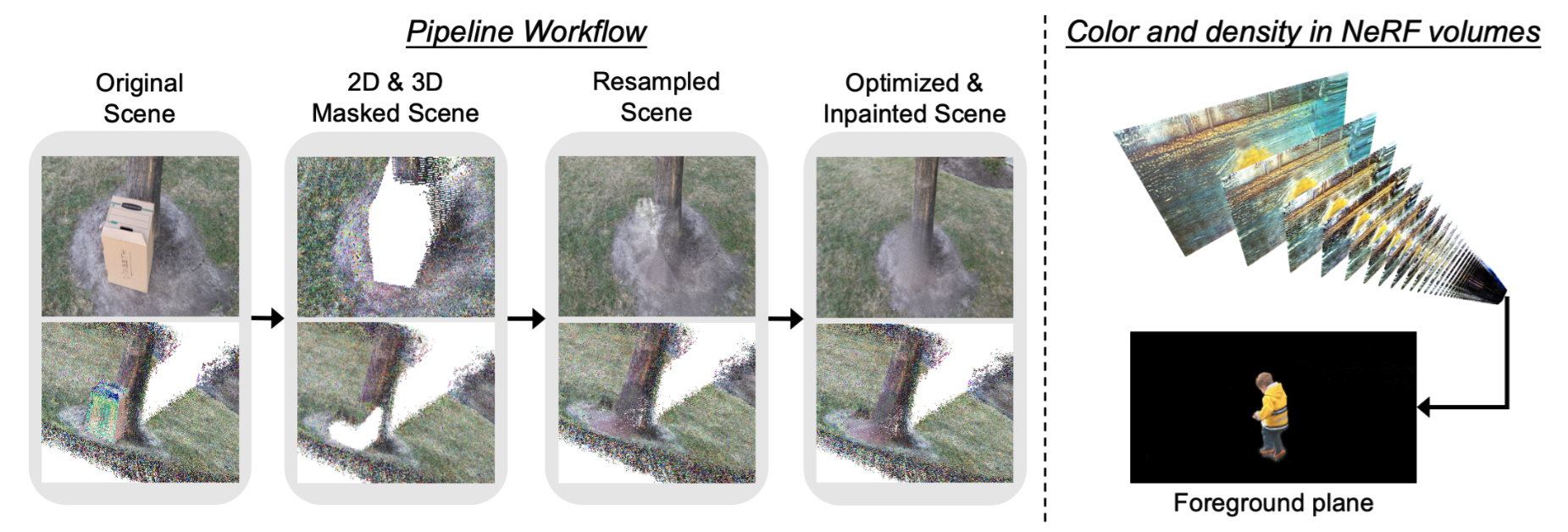

Point Resampling and Ray Transformation Aid to Editable NeRF Models

Zhenyang Li, Zilong Chen, Feifan Qu, Mingqing Wang, Yizhou Zhao, Kai Zhang, Yifan Peng

arXiv preprint 2024

In NeRF-aided editing tasks, object movement presents difficulties in supervision generation due to the introduction of variability in object positions. Moreover, the removal operations of certain scene objects often lead to empty regions, presenting challenges for NeRF models in inpainting them effectively. We propose an implicit ray transformation strategy, allowing for direct manipulation of the 3D object's pose by operating on the neural-point in NeRF rays. To address the challenge of inpainting potential empty regions, we present a plug-and-play inpainting module, dubbed differentiable neural-point resampling (DNR), which interpolates those regions in 3D space at the original ray locations within the implicit space, thereby facilitating object removal & scene inpainting tasks. Importantly, employing DNR effectively narrows the gap between ground truth and predicted implicit features, potentially increasing the mutual information (MI) of the features across rays. Then, we leverage DNR and ray transformation to construct a point-based editable NeRF pipeline PR^2T-NeRF. Results primarily evaluated on 3D object removal & inpainting tasks indicate that our pipeline achieves state-of-the-art performance. In addition, our pipeline supports high-quality rendering visualization for diverse editing operations without necessitating extra supervision.

59-4: Towards Real-time 3D Computer-Generated Holography with Inverse Neural Network for Near-eye Displays

Wenbin Zhou, Xiangyu Meng, Feifan Qu, Yifan Peng

SID Symposium Digest of Technical Papers 2024

Holography plays a vital role in the advancement of virtual reality (VR) and augmented reality (AR) display technologies. Its ability to create realistic three‐dimensional (3D) imagery is crucial for providing immersive experiences to users. However, existing computer‐generated holography (CGH) algorithms used in these technologies are either slow or not 3D‐compatible. This article explores four inverse neural network architectures to overcome these issues for real‐time and 3D applications.
