PhD Student @ HKU

I am a first-year PhD student in the Department of Electrical and Electronic Engineering at The University of Hong Kong. Currently, I am conducting research in the Computational Imaging & Mixed Representation Laboratory (WeLight@HKU) led by Dr. Evan Y. Peng.

I completed my bachelor's degree in Electronic Information Engineering at Xidian University and then a master's degree in Electrical and Electronic Engineering at The University of Hong Kong, supervised by Dr. Evan Y. Peng.

My current research interests are holography, computer graphics, and XR.

Education

- The University of Hong Kong, PhD in Electrical and Electronic Engineering, Sep 2025 - Present
- The University of Hong Kong, MSc in Electrical and Electronic Engineering, Sep 2022 - Dec 2023
- Xidian University & École Polytechnique de l'Université de Nantes, Joint Bachelor's Program in Electronic Information Engineering, Sep 2018 - Jul 2022

Experience

- The University of Hong Kong
  - Research Assistant, Feb 2024 - Present
  - Student Researcher, Sep 2022 - Aug 2023
- Xidian University
  - Undergraduate Research Assistant, Sep 2021 - Jun 2022
  - Undergraduate Researcher, Sep 2019 - Sep 2021

Extracurricular Activities

- The University of Hong Kong
  - Lab Activity Coordinator, Feb 2024 - Present
- Xidian University
  - Secretary of the Communist Youth League Branch, Sep 2018 - Jun 2022
  - Director of Outreach Department in Student Union, Sep 2019 - Sep 2020
- Hebei Zhengding High School
  - Class Leader, Sep 2015 - Jun 2018
  - Academic Representative, Sep 2015 - Jun 2018
  - President of the Club Union, Sep 2016 - Jun 2017
  - President of Student Union, Sep 2016 - Jun 2017

Honors & Awards

- SID Display Week 2025 Student Travel Grant, 2025
- HKU EEE Distinguished Dissertation Scholarship, 2023
- The Funding Scheme for Student Projects/Activities in InnoWing, 2023
- Xidian University Academic Performance Scholarship, 2021
- The National Challenge Cup Competition of University Students, Tier 2, 2021
- The Internet Plus Program on Innovation & Entrepreneurship of University Students, Tier 1, 2021
- China-France Inspirational Schooling Scholarship, 2020
- Outstanding Secretary of the Communist Youth League Branch, 2019

News

Selected Publications

"Hands-on" Light Field Display via Engineering Scalable Optics, Algorithms, and Mounts

Feifan Qu, Pengfei Shen, Li Liao, Yifan Peng

SIGGRAPH Asia 2025 XR 2025

We present a hands-on light field display for shared XR to address the isolation of head-mounted displays. Using low-cost, off-the-shelf components, it projects a light field to create vivid 3D scenes with continuous parallax and integrates gesture tracking for intuitive interaction.

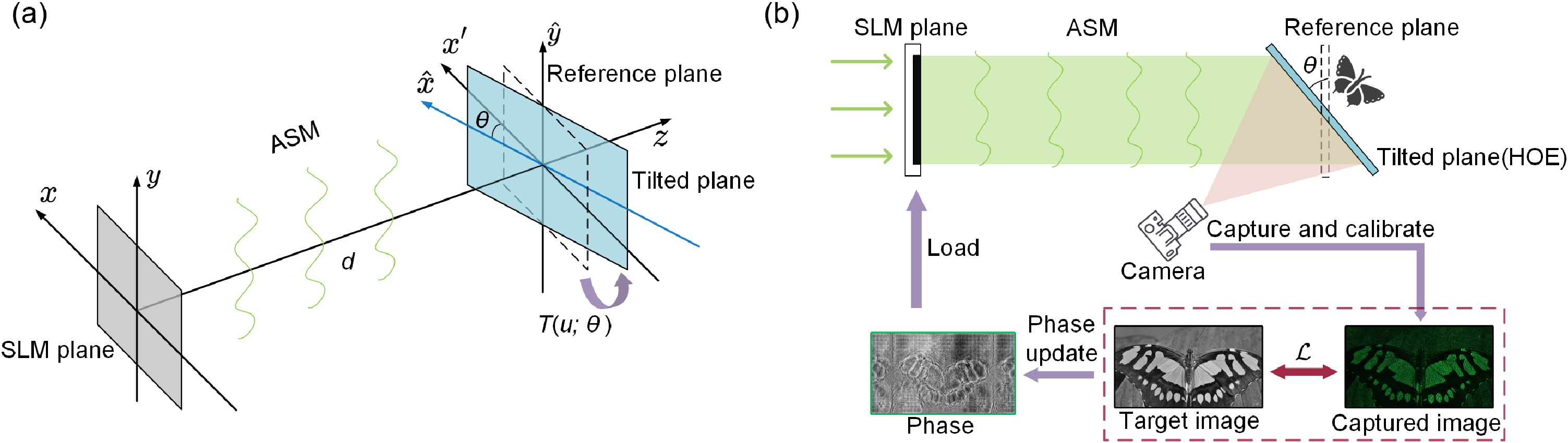

Off-axis holographic augmented reality displays with HOE-empowered and camera-calibrated propagation

Xinxing Xia, Daqiang Ma, Xiangyu Meng, Feifan Qu, Huadong Zheng, Yingjie Yu, Yifan Peng

OPTICA Photonics Research 2025

Holographic near-eye augmented reality (AR) displays featuring tilted inbound/outbound angles on compact optical combiners hold significant potential yet often struggle to deliver satisfying image quality. This is primarily attributed to two reasons: the lack of a robust off-axis-supported phase hologram generation algorithm; and the suboptimal performance of ill-tuned hardware parts such as imperfect holographic optical elements (HOEs). To address these issues, we incorporate a gradient descent-based phase retrieval algorithm with spectrum remapping, allowing for precise hologram generation with wave propagation between nonparallel planes. Further, we apply a camera-calibrated propagation scheme to iteratively optimize holograms, mitigating imperfections arising from the defects in the HOE fabrication process and other hardware parts, thereby significantly lifting the holographic image quality. We build an off-axis holographic near-eye display prototype using off-the-shelf light engine parts and a customized full-color HOE, demonstrating state-of-the-art virtual reality and AR display results.

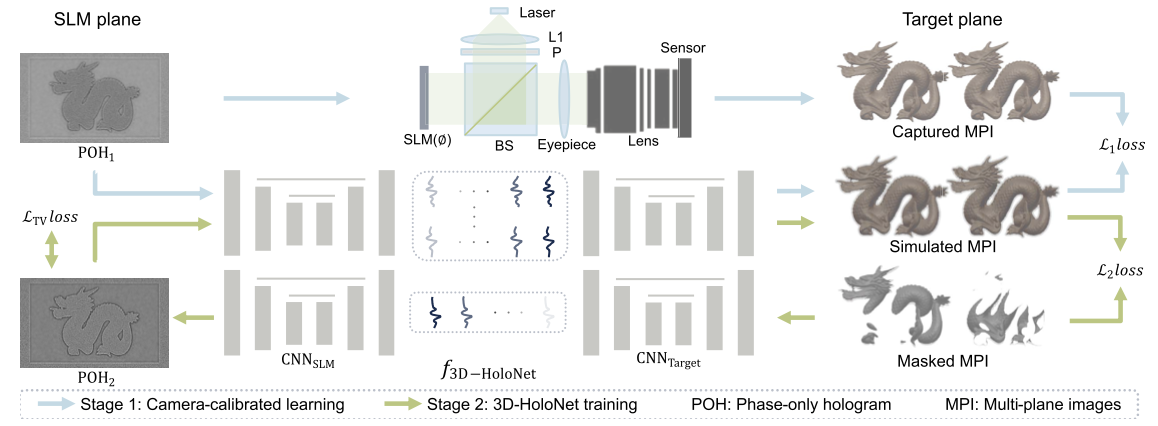

3D-HoloNet: fast, unfiltered, 3D hologram generation with camera-calibrated network learning

Wenbin Zhou*, Feifan Qu*, Xiangyu Meng, Zhenyang Li, Yifan Peng (* equal contribution)

OPTICA Optics Letters 2025

Computational holographic displays typically rely on time-consuming iterative computer-generated holographic (CGH) algorithms and bulky physical filters to attain high-quality reconstruction images. This trade-off between inference speed and image quality becomes more pronounced when aiming to realize 3D holographic imagery. This work presents 3D-HoloNet, a deep neural network-empowered CGH algorithm for generating phase-only holograms (POHs) of 3D scenes, represented as RGB-D images, in real time. The proposed scheme incorporates a learned, camera-calibrated wave propagation model and a phase regularization prior into its optimization. This unique combination allows for accommodating practical, unfiltered holographic display setups that may be corrupted by various hardware imperfections. Results tested on an unfiltered holographic display reveal that the proposed 3D-HoloNet can achieve 30 fps at full HD for one color channel using a consumer-level GPU while maintaining image quality comparable to iterative methods across multiple focused distances.
